June 17, 2024 – A recent study finds that many people cannot reliably tell GPT-4 apart from a human in the Turing Test.

The Turing Test, also known as the “Imitation Game,” was proposed by computer scientist Alan Turing in 1950. It asks whether a machine can hold a conversation convincingly enough that the other party mistakes it for a real person.

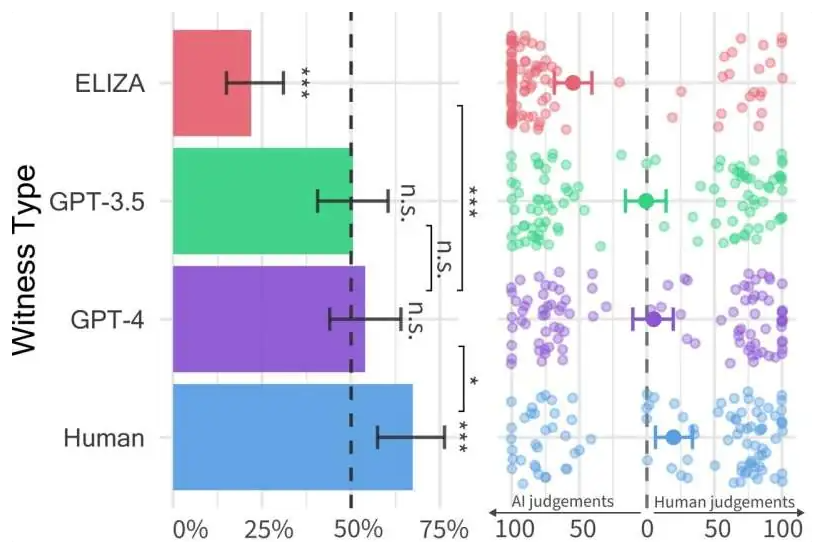

Researchers from the University of California, San Diego recruited 500 participants, each of whom held a five-minute conversation with one of four types of “interlocutor”: a real person; ELIZA, an early chatbot from the 1960s; or GPT-3.5 or GPT-4, the AI models behind ChatGPT. After the conversation, participants were asked to judge whether their interlocutor was a human or a machine.

The results, posted on the preprint server arXiv, showed that 54% of participants who spoke with GPT-4 mistook it for a real person. By comparison, only 22% judged ELIZA, which relies on preset responses, to be human, while 50% judged GPT-3.5 to be human. The human interlocutor was correctly identified as human 67% of the time.

The researchers point out that the Turing Test may be overly simplistic: conversational style and emotional factors appear to play a larger role in the outcome than traditional notions of intelligence.

Nell Watson, an AI researcher at the Institute of Electrical and Electronics Engineers (IEEE), says that raw intelligence is not everything. What really matters is being intelligent enough to understand the situation and the skills of others, and having the empathy to bring these elements together. Capability is only part of AI’s value; understanding human values, preferences, and boundaries is just as crucial. These are the qualities that would let AI serve as a loyal and reliable butler in our lives.

Watson also noted that the study poses challenges for future human-computer interaction: people will increasingly question whether they are really talking to a person, especially when sensitive topics are involved. At the same time, the study highlights how far artificial intelligence has advanced in the GPT era.