**August 11, 2023 -** NVIDIA has partnered with the Technion-Israel Institute of Technology, Bar-Ilan University, and Simon Fraser University to publish new research centered on the CALM AI model.

CALM AI, whose name stands for Conditional Adversarial Latent Models, is designed for training customizable virtual characters. The collaboration has produced a technical paper that describes the approach.

A highlight of NVIDIA’s work is how much simulated experience it compresses into a short training run: ten days of real-world training correspond to roughly a decade of simulated time. As a result, CALM AI acquires its skills in a fraction of the time the same experience would take in real time.
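To put the reported ratio in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope check of the figures quoted above, not code from the paper; the function name `speedup` and the 365.25-day year are assumptions made for illustration.

```python
# Back-of-the-envelope check of the reported training ratio.
# Not taken from the CALM paper; names and constants are illustrative.
DAYS_PER_YEAR = 365.25  # assumption: average calendar year length


def speedup(simulated_years: float, wall_clock_days: float) -> float:
    """Return how many simulated days elapse per real day of training."""
    return simulated_years * DAYS_PER_YEAR / wall_clock_days


# Figures quoted in the article: a decade of simulation in ten real days.
ratio = speedup(simulated_years=10, wall_clock_days=10)
print(f"{ratio:.2f}x faster than real time")  # prints "365.25x faster than real time"
```

Under these assumptions, each real day of training covers about one simulated year.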

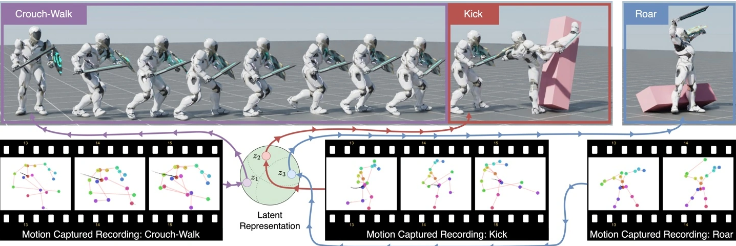

What distinguishes CALM AI is its capacity to simulate a repertoire of five billion distinct human movements. From walking to combat, it covers postures, locomotion, and fine gestures.

The model is also built to capture the complexity and diversity of human movement. Rather than producing a narrow set of motions, CALM AI reflects the variety found in real-world motion, which makes virtual characters look more authentic.

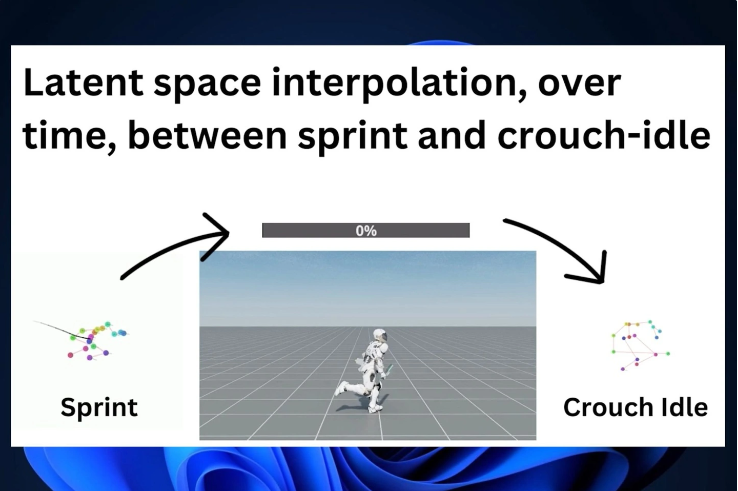

Beyond simulation, CALM AI gives users direct control over character movement. Users can shape and direct motion through interfaces similar to those of interactive video games, moving from simulating a character to steering it.

Central to CALM AI’s effectiveness is its semantic representation of motion. This representation lets generated motions be integrated into the training of higher-level tasks, offering a path to more capable virtual characters.