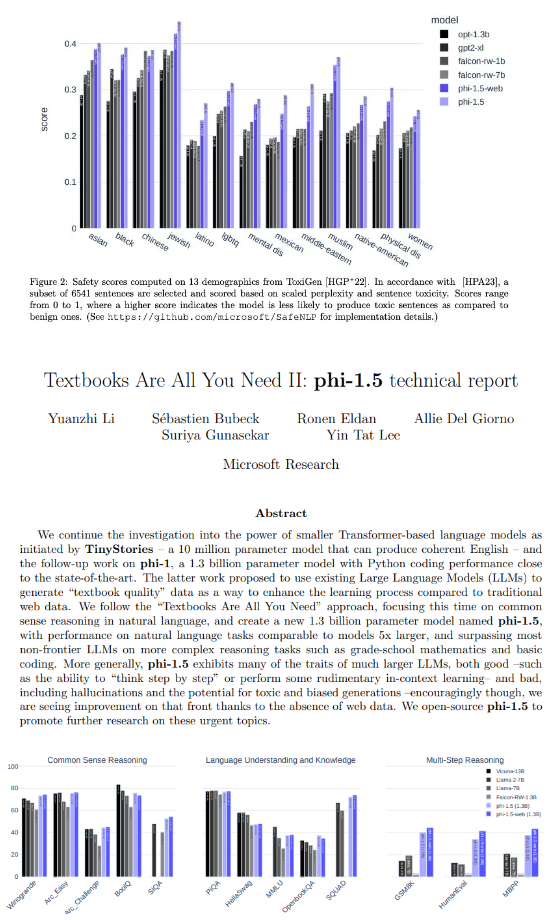

September 12, 2023 – Microsoft Research unveiled its latest model yesterday: phi-1.5, a pre-trained language model with 1.3 billion parameters. The model is aimed at a wide range of applications, including question-answering (QA) systems, chatbots, and code generation.

Phi-1.5 was trained on a diverse range of data sources, including Q&A content from the Python section of StackOverflow, competition code from code_contests, and synthetic Python textbooks generated by gpt-3.5-turbo-0301. It also incorporates a large body of synthetic NLP texts, which broadens its language understanding.

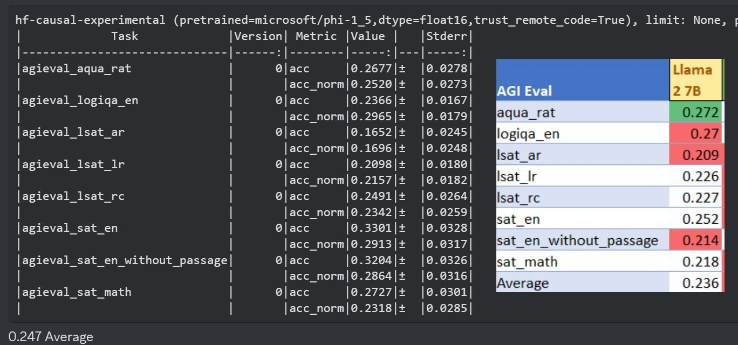

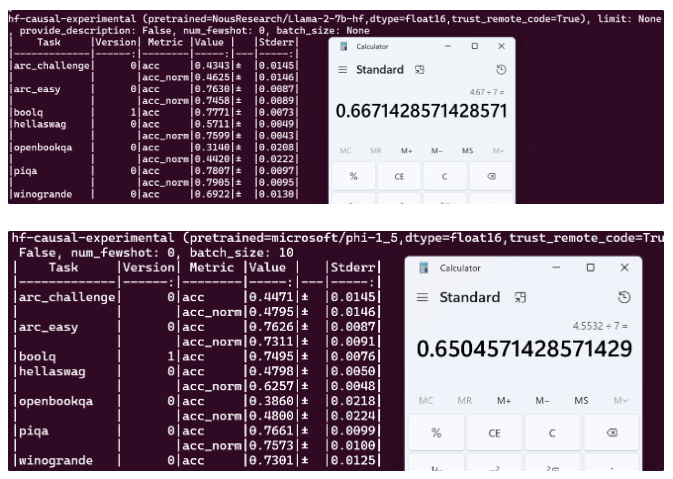

Microsoft says that phi-1.5 outperforms most models with fewer than 10 billion parameters on benchmarks for common sense, language comprehension, and logical reasoning. On AGIEval, phi-1.5 scores higher than Meta’s Llama 2 with 7 billion parameters. When evaluated with the LM-Eval Harness on the GPT4All suite, phi-1.5 is on par with the 7-billion-parameter Llama 2.

The release is a notable step for natural language processing and artificial intelligence research. Phi-1.5’s performance across these domains and tasks suggests that a model of this size can compete with considerably larger ones.