September 06, 2024 – In a recent technical blog post, Micron announced that its “production-capable” 12-layer stacked HBM3E 36GB memory is now being delivered to key industry partners for validation across the entire AI ecosystem.

According to Micron, the 12-layer stacked HBM3E offers 50% more capacity than existing 8-layer stacked HBM3E products (36GB versus 24GB per stack). Micron says this allows large AI models such as Llama-70B to run on a single processor, avoiding the latency associated with multi-processor operation.
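The capacity figures can be checked with simple arithmetic. The sketch below is illustrative only. The 36GB capacity and the 12-layer and 8-layer stack heights come from the announcement. The other inputs are assumptions for the example rather than Micron's methodology: that the 8-layer stack uses the same die density, and that model weights are stored at 2 bytes per parameter (FP16/BF16). It also ignores KV cache and activation memory.

```python
import math

STACK_12H_GB = 36   # from the announcement
LAYERS_12H = 12     # from the announcement
LAYERS_8H = 8       # from the announcement

# Per-die capacity implied by the 12-layer stack.
gb_per_die = STACK_12H_GB / LAYERS_12H            # 3 GB per DRAM die

# Assumption: the 8-layer stack uses dies of the same density.
stack_8h_gb = gb_per_die * LAYERS_8H              # 24 GB
capacity_gain = STACK_12H_GB / stack_8h_gb - 1    # 0.5, i.e. 50%

# Assumption: 70 billion parameters stored at 2 bytes each (FP16/BF16).
PARAMS_BILLION = 70
BYTES_PER_PARAM = 2
weights_gb = PARAMS_BILLION * BYTES_PER_PARAM     # 140 GB of weights


def stacks_needed(model_gb: float, stack_gb: float) -> int:
    """Smallest number of HBM stacks whose combined capacity holds the weights."""
    return math.ceil(model_gb / stack_gb)


print(f"8-layer stack: {stack_8h_gb:.0f} GB, gain: {capacity_gain:.0%}")
print("12-layer stacks needed:", stacks_needed(weights_gb, STACK_12H_GB))
print("8-layer stacks needed:", stacks_needed(weights_gb, stack_8h_gb))
```

Under these assumptions, 140 GB of weights fits in four 36GB stacks rather than six 24GB stacks. That is consistent with the claim that higher per-stack capacity helps a model fit on one processor. The source does not state how many stacks a given processor carries.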

The Micron 12-layer stacked HBM3E memory features an I/O pin speed of 9.2+ Gb/s, providing over 1.2 TB/s of memory bandwidth. Additionally, Micron claims that this product consumes significantly less power than its competitors’ 8-layer stacked HBM3E offerings.
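The relationship between pin speed and bandwidth can be sketched as follows. The 1024-bit interface width per stack is the standard figure for the HBM3 generation. It is an assumption here, since the announcement does not state it, and the function names are hypothetical.

```python
INTERFACE_WIDTH_BITS = 1024  # assumption: data pins per HBM3E stack


def stack_bandwidth_gbytes(pin_speed_gbps: float,
                           width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Peak bandwidth of one stack in GB/s for a given per-pin rate in Gb/s."""
    return pin_speed_gbps * width_bits / 8


def pin_speed_for(bandwidth_gbytes: float,
                  width_bits: int = INTERFACE_WIDTH_BITS) -> float:
    """Per-pin rate in Gb/s required to reach a target bandwidth in GB/s."""
    return bandwidth_gbytes * 8 / width_bits


print(f"{stack_bandwidth_gbytes(9.2):.1f} GB/s at 9.2 Gb/s per pin")
print(f"{pin_speed_for(1200):.3f} Gb/s per pin needed for 1.2 TB/s")
```

At exactly 9.2 Gb/s this gives roughly 1.18 TB/s, so the quoted "over 1.2 TB/s" corresponds to pin speeds of about 9.4 Gb/s or higher, which falls within the "9.2+" range.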

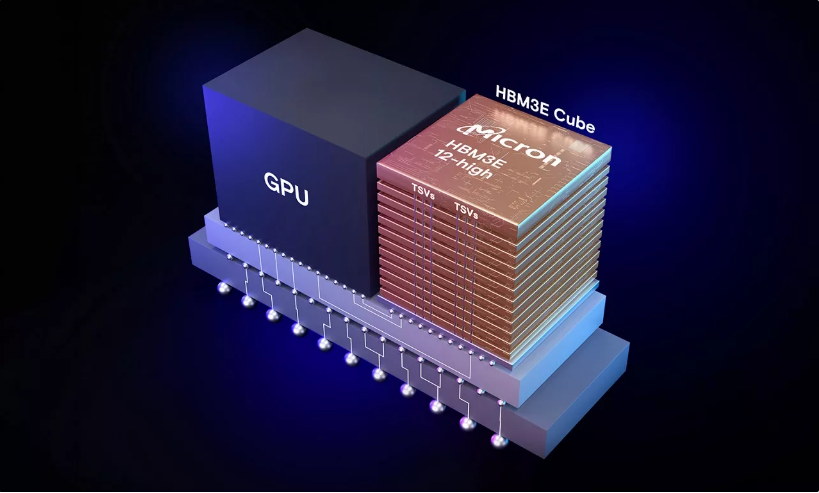

HBM3E is not sold as a standalone product. It is integrated into AI chip systems, which depends on collaboration among memory suppliers, chip customers, and outsourced semiconductor assembly and test (OSAT) companies. Micron is a partner member of TSMC’s 3DFabric advanced packaging alliance.

Dan Kochpatcharin, Director of Ecosystem and Alliance Management at TSMC, recently stated, “TSMC and Micron have maintained a long-term strategic partnership. As part of the OIP ecosystem, we work closely to enable Micron’s HBM3E-based systems and CoWoS packaging designs to support our customers’ AI innovations.”