March 10, 2025 – Google has announced Gemini Embedding, a new text embedding model available through the Gemini API. According to the company’s blog post, the model ranks first on the Massive Text Embedding Benchmark (MTEB) Multilingual leaderboard, ahead of models from Mistral, Cohere, and Qwen.

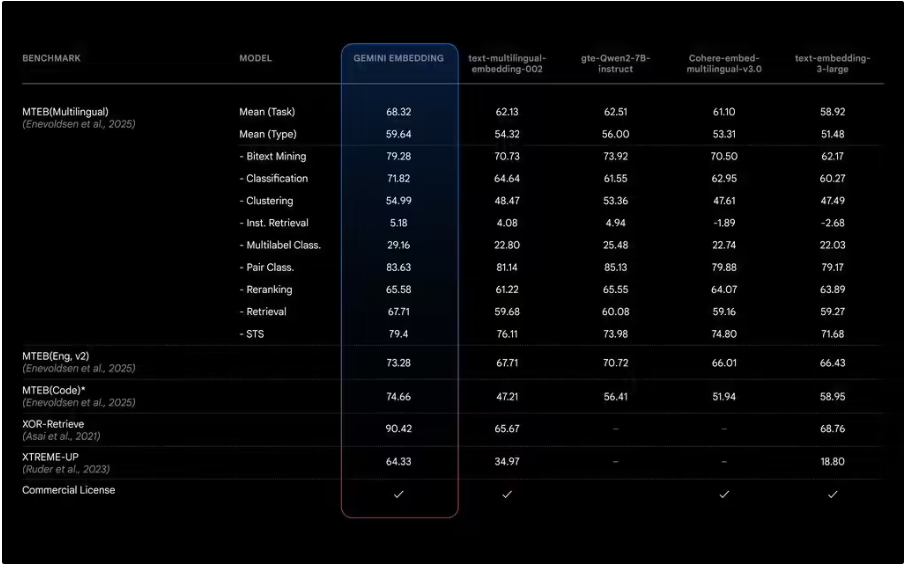

Gemini Embedding converts text into numerical representations (vectors) in which texts with similar meanings map to nearby points. This supports applications such as semantic search, recommendation systems, and document retrieval. On MTEB the model achieved a mean task score of 68.32, well ahead of models such as Linq-Embed-Mistral and gte-Qwen2-7B-instruct, which makes it the current state-of-the-art (SOTA) model on that benchmark.
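To illustrate how vectors enable semantic search, here is a minimal sketch that ranks documents by cosine similarity to a query vector. It rests on stated assumptions: the three-dimensional vectors are hypothetical stand-ins for real embeddings, which have many more dimensions and would be returned by a model such as Gemini Embedding. No Gemini API call is made, and the function names are illustrative.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors: dot(a, b) / (|a| * |b|)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def semantic_search(query_vec, doc_vecs, top_k=2):
    """Return the top_k (doc_id, score) pairs, most similar to the query first."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]


# Hypothetical toy vectors; real embeddings would come from an embedding model.
docs = {
    "refund-policy": [0.9, 0.1, 0.0],
    "shipping-times": [0.1, 0.9, 0.1],
    "return-label": [0.8, 0.2, 0.1],
}
query = [0.85, 0.15, 0.05]  # hypothetical embedding of "how do I get my money back?"

for doc_id, score in semantic_search(query, docs):
    print(f"{doc_id}: {score:.3f}")
```

Cosine similarity is a common choice because it compares the direction of vectors rather than their length. At scale, the linear scan shown here is usually replaced by an approximate nearest-neighbour index.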

In the MTEB evaluations, Gemini Embedding scored 85.13 on pair classification, 67.71 on retrieval, and 65.58 on reranking. These task types map directly onto practical workloads such as AI search engines, document analysis, and chatbot optimization.
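Pair classification asks whether two texts belong together, for example whether they are paraphrases or duplicates. The sketch below assumes a typical way such tasks are scored; it is not a reproduction of MTEB's evaluation code. It ranks pairs by their embedding similarity and computes average precision against binary labels. The scores and labels are made up for illustration.

```python
def average_precision(scores, labels):
    """Average precision of text pairs ranked by similarity score (higher = more similar).

    scores: one similarity score per pair (e.g. cosine similarity of the two embeddings).
    labels: 1 if the pair is a true match (duplicate/paraphrase), else 0.
    """
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits = 0
    precision_sum = 0.0
    for rank, (_, label) in enumerate(ranked, start=1):
        if label == 1:
            hits += 1
            precision_sum += hits / rank  # precision at the rank of this positive pair
    return precision_sum / hits if hits else 0.0


# Hypothetical similarity scores for four text pairs and their gold labels.
scores = [0.90, 0.70, 0.60, 0.30]
labels = [1, 0, 1, 0]
print(f"AP = {average_precision(scores, labels):.4f}")
```

A higher score means the model's similarities place true matches above non-matches more consistently, without committing to a single decision threshold.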

Introduced by Hugging Face, MTEB evaluates how well embedding models rank, classify, and retrieve text across more than 50 datasets. As a widely used industry benchmark, it gives enterprises a useful reference point when choosing an embedding model.
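MTEB's retrieval tasks report nDCG@10 (normalized discounted cumulative gain over the top 10 results) as their main score. The sketch below computes it for a single query from a ranked list of document IDs and graded relevance judgements. The function names and toy data are illustrative, and MTEB runs its own evaluation tooling rather than this code.

```python
import math


def dcg_at_k(gains, k):
    """Discounted cumulative gain: sum of gain_i / log2(i + 1) over ranks i = 1..k."""
    return sum(gain / math.log2(rank + 1) for rank, gain in enumerate(gains[:k], start=1))


def ndcg_at_k(retrieved_ids, qrels, k=10):
    """nDCG@k for one query.

    retrieved_ids: document IDs in the order the model ranked them.
    qrels: graded relevance judgements {doc_id: relevance}; unlisted docs count as 0.
    """
    gains = [qrels.get(doc_id, 0) for doc_id in retrieved_ids]
    # The ideal ranking places every judged document in descending order of relevance.
    ideal = dcg_at_k(sorted(qrels.values(), reverse=True), k)
    return dcg_at_k(gains, k) / ideal if ideal > 0 else 0.0


# Hypothetical query with two relevant documents, "a" and "b".
qrels = {"a": 1, "b": 1}
print(ndcg_at_k(["a", "b", "c"], qrels))  # perfect ranking
print(ndcg_at_k(["c", "a", "b"], qrels))  # relevant docs pushed down one rank
```

The logarithmic discount rewards placing relevant documents near the top, which is why a model that ranks well scores higher even when it retrieves the same set of documents.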

Gemini Embedding’s strong results reinforce Google’s position in AI and lay a solid foundation for commercial adoption. Potential applications include improving search engine results, supporting multilingual translation and customer service automation, and strengthening AI analytics and semantic search on Google Cloud.