May 21, 2025 – At Google I/O 2025, the company's annual developer conference, Google showcased a prototype of its Android XR smart glasses, Android Central reported today. While the tech giant has not yet announced sales plans for the device, it did reveal upcoming collaborations with eyewear brands Warby Parker and Gentle Monster to create stylish AR glasses powered by Gemini AI.

In terms of design, the Android XR prototype closely resembles regular spectacles. It features slightly thicker temples and frames, with a nearly undetectable camera LED that remains hidden until activated. This subtle aesthetic makes it blend seamlessly into everyday wear.

As for the wearing experience, Android Central's first impressions from its hands-on session suggest that Google's Android XR prototype stands out because it needs no external attachments or cables, which sets it apart from competitors like Meta Orion. It feels about as comfortable as a standard pair of Ray-Ban glasses, though whether its weight causes fatigue over long periods of wear remains to be tested.

The device employs a single-display approach, with only the right lens equipped with a screen. This design choice, far from being a drawback, enhances usability. Google has strategically positioned the displayed content, ensuring it rarely obstructs the user’s line of sight. The screen only takes center stage during specific tasks, such as photography.

The single-lens setup also simplifies fitting, which makes it one of the design's notable highlights. Dual-display AR glasses often require cumbersome adjustments to find the optimal position, whereas the Android XR prototype can be comfortably adjusted in just a few seconds.

Functionally, the Android XR prototype integrates Google's Gemini multimodal assistant, which users activate by pressing a touch-sensitive area on the right temple. During the demo, Gemini showed impressive real-time analysis of its surroundings. It could identify the content of a book, suggest hiking trails in the San Francisco Bay Area, and even explain the history of artworks and compare their themes, all with minimal physical interaction.

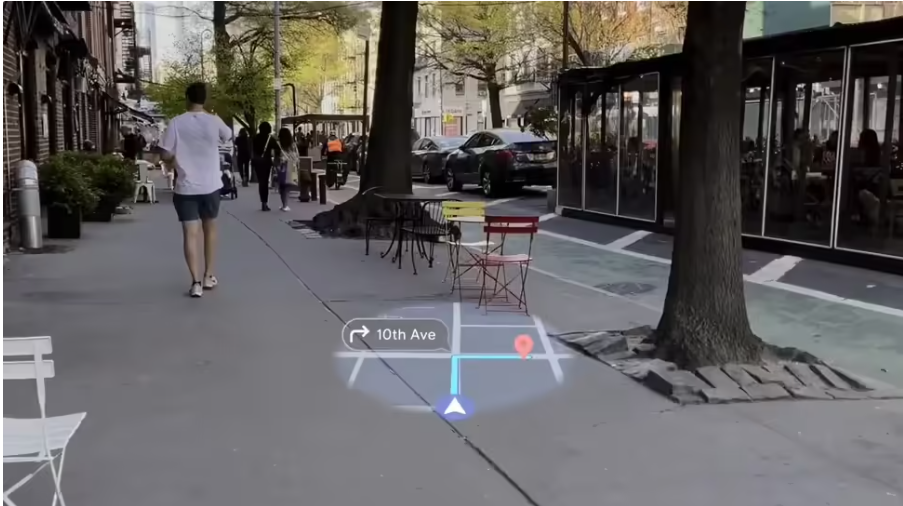

One of the standout features is the Google Maps integration. When the user looks straight ahead, the glasses display simple directional arrows and street names; when the user looks down, the view switches seamlessly to a real-time map. Calendar reminders and Messages notifications are also displayed intuitively, adjusting dynamically to where the user is looking.

The Android XR glasses are designed to work in tandem with an Android smartphone. Visual data and voice commands are processed by the glasses and then sent to the phone for more complex computations. This approach helps keep the glasses compact and lightweight, though its impact on the phone’s battery life is still under scrutiny.